Automating Quality Control With Label Studio

We work with a huge variety of data teams. Across all our customers, we've seen over and over that high-quality, well-annotated data is one of the most important investments you can make in machine learning projects. Elevating annotation quality doesn't just enhance model performance in real-world applications—it also slashes training times, reduces computational demands, and cuts costs.

But, realistically, what can your data team do to increase labeling quality? At HumanSignal, this question is our obsession. Our goal is to make Label Studio the most efficient workflow in the industry for generating quality data annotations. Lately, we've focused heavily on adding more automation in two key areas: (1) using ML models and GenAI to automatically suggest and apply labels, and (2) automating end-to-end workflows for quality control and collaboration.

Ground truth is essential to creating a high-quality dataset. Without existing ground truth examples to test your model or your annotators' answers against, there is no way to ensure the reliability of the dataset. The most effective way to establish a ground truth dataset is to confirm that all annotators agree on the labels assigned to the trial examples. Only then can you be confident in the accuracy and quality of the dataset.

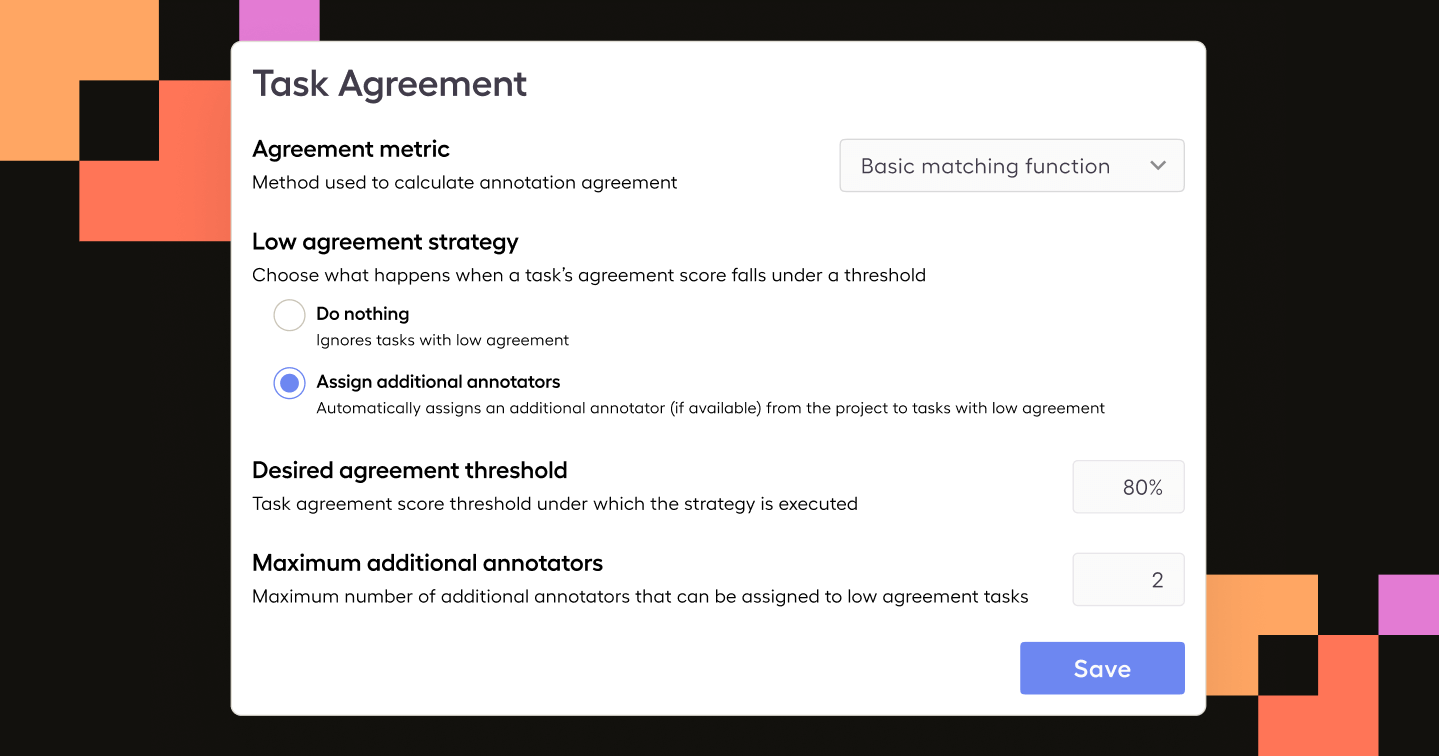

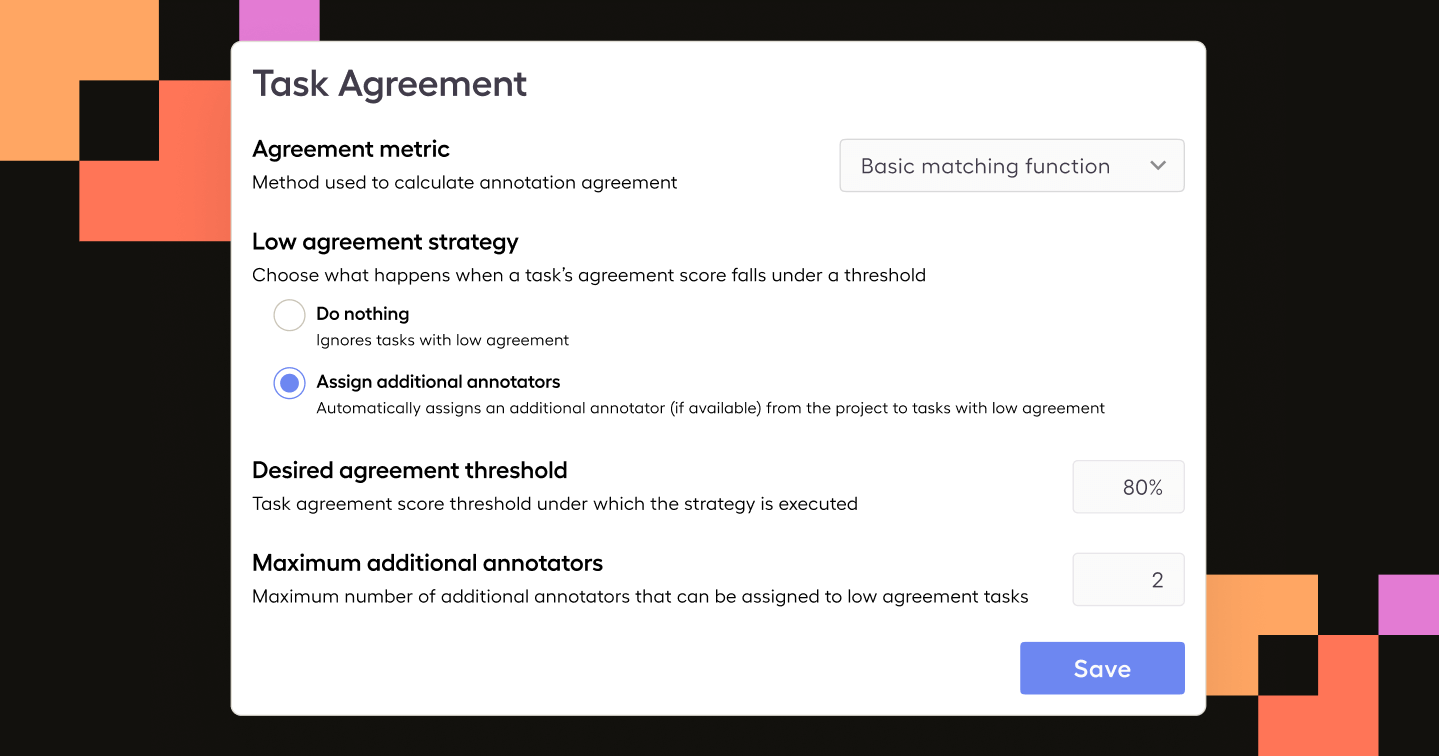

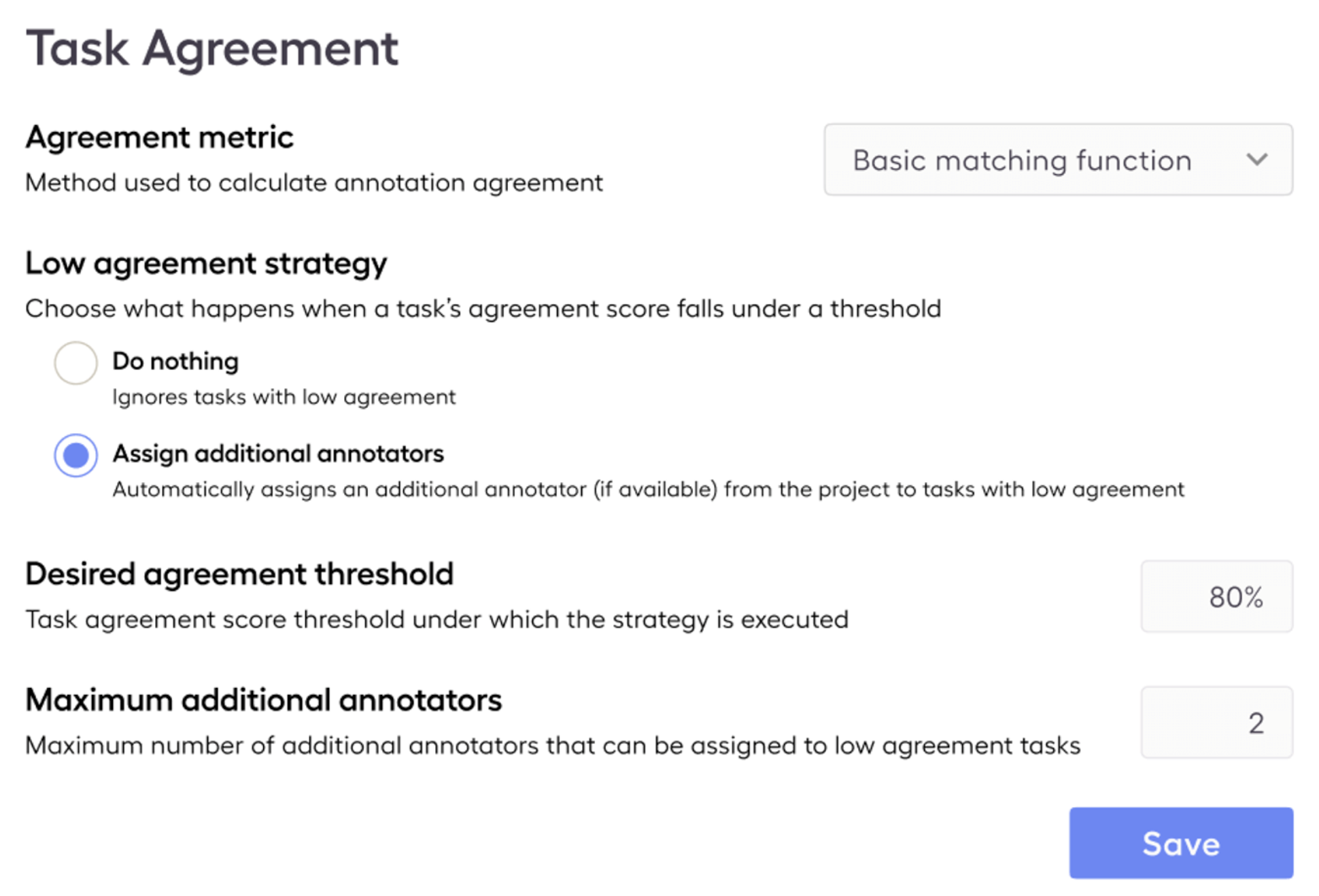

On the other hand, low agreement is a strong signal of an item that needs further review. Starting today, you can not only see when there's low agreement—you can take action. Label Studio can now automatically assign and alert additional annotators any time agreement on a particular labeling task falls below your set threshold.

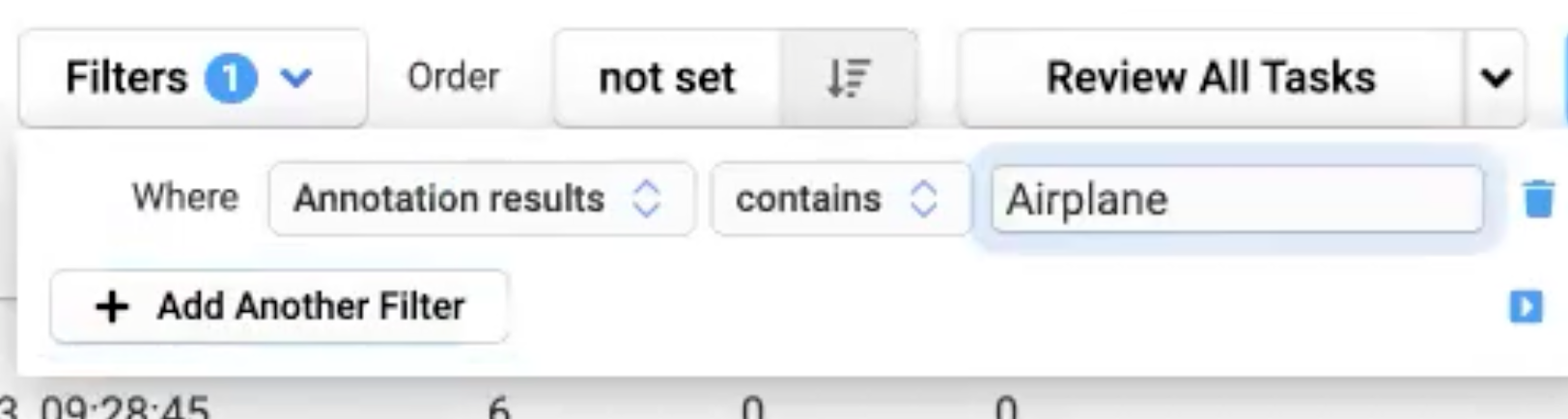

Inside Label Studio, project managers and owners can already create tabs with smart filters to keep an eye on the labeling process.

Let's filter down to a known problem category. Say your model struggles with identifying airplanes in images. Now we can sort the results to spot check the most recent annotations, or display only the tasks with multiple annotations and low agreement scores.

Your team is in complete control of what agreement ultimately means, and this is one area where Label Studio is really powerful. Depending on the type of labeling you perform, you can select the metric used to calculate the agreement score, and Label Studio Enterprise customers can even write functions to define custom metrics.
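To make the idea of a custom metric concrete, here is a minimal sketch that scores two annotations by the overlap of their chosen labels (Jaccard similarity). The function name `agreement`, the helper `label_set`, and the assumed annotation layout (a `result` list whose items carry `value.choices`) are illustrative assumptions rather than a confirmed Label Studio Enterprise interface, so check the product documentation for the exact signature it expects.

```python
from typing import Any, Dict, Set


def label_set(annotation: Dict[str, Any]) -> Set[str]:
    """Collect every choice label found in an annotation (assumed layout)."""
    labels: Set[str] = set()
    for region in annotation.get("result", []):
        labels.update(region.get("value", {}).get("choices", []))
    return labels


def agreement(annotation_1: Dict[str, Any], annotation_2: Dict[str, Any]) -> float:
    """Hypothetical custom metric: Jaccard similarity of the two label sets."""
    a, b = label_set(annotation_1), label_set(annotation_2)
    if not a and not b:
        # Two empty annotations are treated as full agreement.
        return 1.0
    return len(a & b) / len(a | b)


# Example: one annotator chose "Airplane", the other chose "Airplane" and "Bird".
first = {"result": [{"value": {"choices": ["Airplane"]}}]}
second = {"result": [{"value": {"choices": ["Airplane", "Bird"]}}]}
score = agreement(first, second)
```

A score of 1.0 means the two annotators picked exactly the same labels, and 0.0 means they share none. A set-overlap score like this suits classification tasks; region-based tasks such as bounding boxes need a geometric comparison instead.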

Above is an example of a task with low agreement. The ideal workflow would escalate a task like this to additional SMEs for further review. Before today, that would require manually emailing other team members to take a look. Now it can be entirely automated.

First, set your agreement threshold. Here, let's use the precision of the bounding boxes as our agreement metric. Now, if the annotators on this task highlight significantly different areas of an image when asked to label airplanes, we'll know to include additional annotators for further review.
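The threshold check described above can be sketched in a few lines. This is only an illustration of one way a bounding-box precision score could be defined and compared to a threshold, not Label Studio's implementation: the box format `(x_min, y_min, x_max, y_max)`, the function names, the 0.5 IoU cutoff, and the 0.8 agreement threshold are all assumptions chosen for the example.

```python
from typing import List, Tuple

# Assumed box format: (x_min, y_min, x_max, y_max)
Box = Tuple[float, float, float, float]


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def box_precision(boxes_a: List[Box], boxes_b: List[Box], iou_cutoff: float = 0.5) -> float:
    """Fraction of boxes in boxes_a that overlap some box in boxes_b at or above iou_cutoff."""
    if not boxes_a:
        # No boxes to match: full agreement only if the other annotator drew none either.
        return 1.0 if not boxes_b else 0.0
    matched = sum(1 for a in boxes_a if any(iou(a, b) >= iou_cutoff for b in boxes_b))
    return matched / len(boxes_a)


def needs_more_annotators(boxes_a: List[Box], boxes_b: List[Box], threshold: float = 0.8) -> bool:
    """Flag the task for escalation when agreement falls below the threshold."""
    return box_precision(boxes_a, boxes_b) < threshold


# Two annotators drew airplane boxes in very different parts of the image.
annotator_1 = [(0.0, 0.0, 10.0, 10.0)]
annotator_2 = [(20.0, 20.0, 30.0, 30.0)]
escalate = needs_more_annotators(annotator_1, annotator_2)
```

In this example the boxes do not overlap at all, so the precision is 0.0 and the task is flagged for additional review. Raising the threshold sends more tasks to reviewers, while lowering it reserves escalation for the clearest disagreements.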

That's it! Additional annotators will now be alerted to weigh in with their expertise. They won't have to search out these instances, because the tasks will arrive automatically in their notifications.

Today's release is a simple but powerful example of the new automations you'll see coming to Label Studio. We can't wait to show you the features we have in store to speed up your labeling workflows. We're just getting started.

If you're interested in seeing a live demonstration of this feature, along with a walkthrough of our overall quality workflow, register for our upcoming webinar, Build Accurate Datasets With Label Studio Automated Quality Workflows. We look forward to seeing you there!