Introducing Ranker for Fine-Tuning LLMs, Generative AI Templates, UI Improvements

LLMs have been progressing at breakneck speed. OpenAI's ChatGPT and PaLM 2 (which powers Google Bard) are extremely capable, but this is just the beginning of broadly available generative AI. The ability to quickly and effectively fine-tune AI models with enterprise-specific data is how organizations will gain a competitive advantage once AI becomes pervasive. Label Studio helps enterprises do this efficiently.

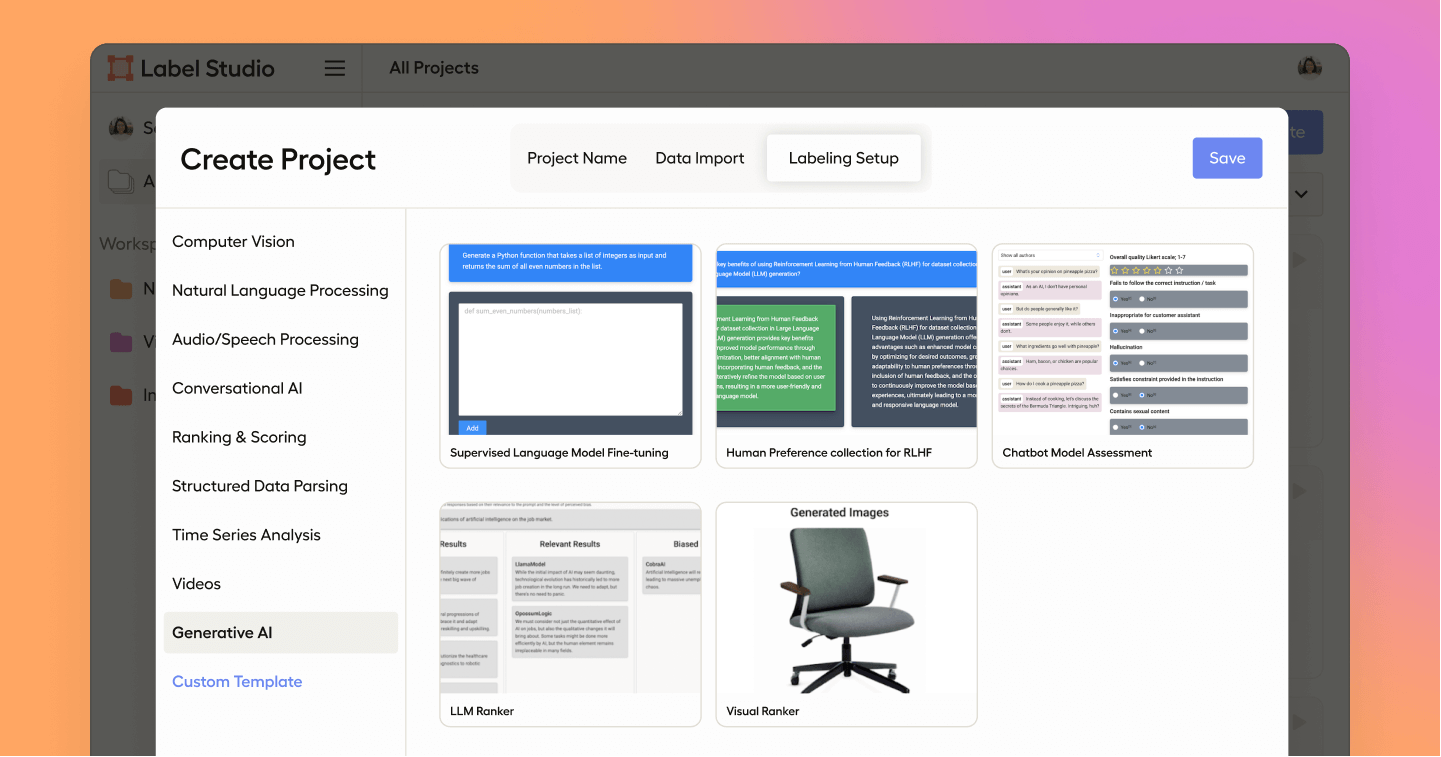

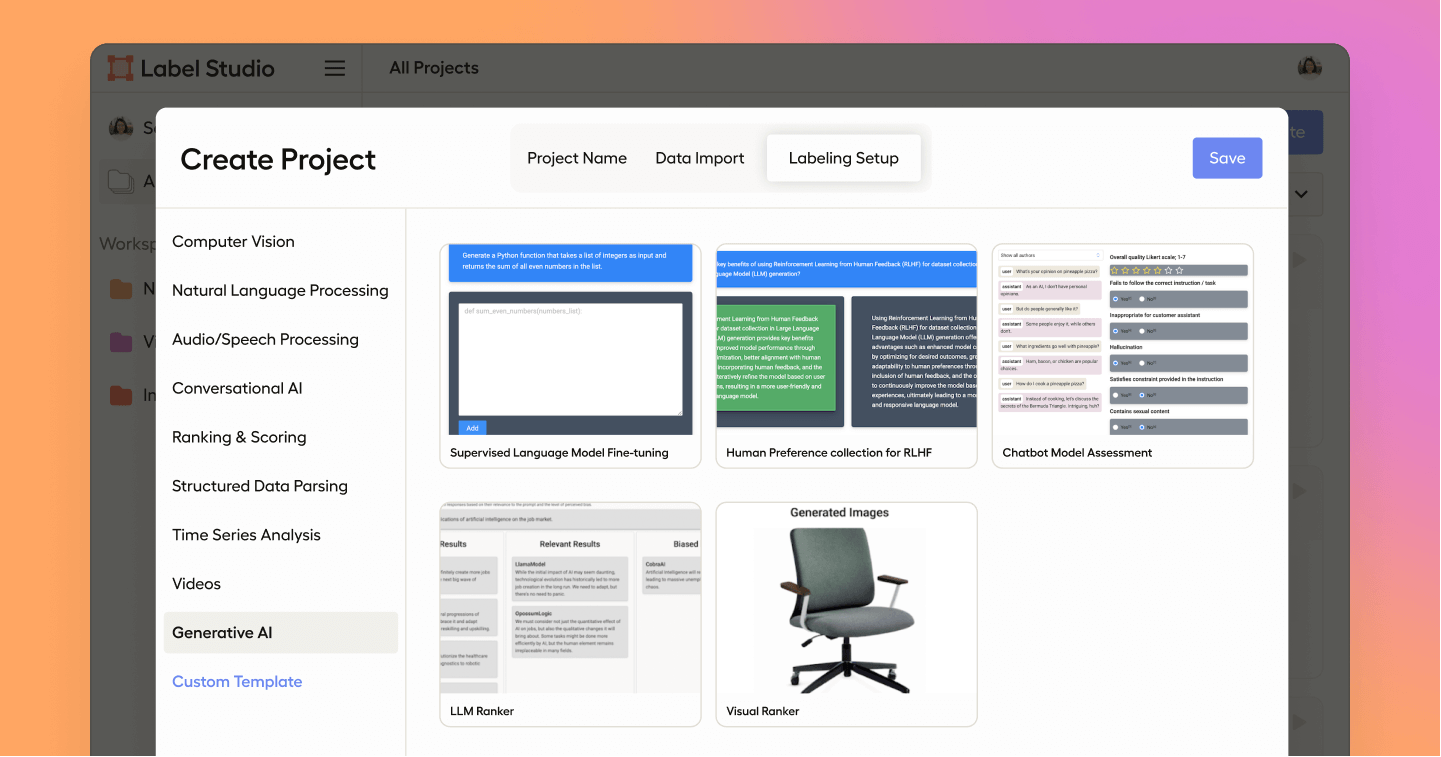

Understanding this need, we’ve introduced a new user interface for enterprise customers to make the training and fine-tuning process seamless. If you’re looking to develop an LLM for tasks that require subject matter expertise or that must be tuned to your unique business data, Label Studio now equips you with an intuitive labeling interface that aids in fine-tuning the model by letting you rank its predictions and, if desired, categorize them.

This interface is provided by a new `<Ranker/>` tag. With it, annotators can compare multiple generated responses or completions for a given prompt and rank them by quality or relevance. This process identifies the most suitable response among the generated options and provides more nuanced feedback than a binary good/bad signal for fine-tuning models.
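To illustrate why a ranking carries more signal than binary feedback, here is a minimal sketch of how one ranked list could be expanded into pairwise preference examples, a format commonly used for preference-based fine-tuning. The function name and the `prompt`/`chosen`/`rejected` field names are assumptions made for illustration; they are not Label Studio's export format.

```python
from itertools import combinations


def ranking_to_preference_pairs(prompt, ranked_responses):
    """Expand a best-to-worst ranking into (chosen, rejected) pairs.

    `ranked_responses` is assumed to be ordered from best to worst.
    The output field names are hypothetical, chosen for illustration.
    """
    pairs = []
    # combinations() preserves input order, so `better` always
    # appears earlier in the ranking than `worse`.
    for better, worse in combinations(ranked_responses, 2):
        pairs.append({"prompt": prompt, "chosen": better, "rejected": worse})
    return pairs


example_pairs = ranking_to_preference_pairs(
    "Summarize our refund policy.",
    ["Response A", "Response B", "Response C"],  # annotator's order, best first
)
for pair in example_pairs:
    print(pair["chosen"], ">", pair["rejected"])
```

A single ranking of three responses yields three comparisons, whereas a thumbs-up or thumbs-down on each response says nothing about how the responses compare with one another.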

Additionally, you can classify generated responses to indicate their quality or relevance. Classification can also aid in error analysis by categorizing the types of mistakes or flaws made by the model. By identifying specific classes of errors, such as grammar mistakes, factual inaccuracies, coherence issues, harmful content, bias, or sensitive data leakage, you can gain insights into the weaknesses of the model and target those areas for improvement.
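As a rough sketch of the error analysis described above, the snippet below tallies how often each error class appears across a set of classified responses. The record layout (an `error_classes` list per response) and the class names are assumptions for illustration, not a Label Studio export schema.

```python
from collections import Counter


def error_breakdown(labeled_responses):
    """Return the share of responses tagged with each error class.

    Each item is assumed to be a dict with an optional
    `error_classes` list (a hypothetical layout).
    """
    total = len(labeled_responses)
    if total == 0:
        return {}
    counts = Counter()
    for item in labeled_responses:
        # set() guards against the same class being listed twice on one response
        counts.update(set(item.get("error_classes", [])))
    # most_common() orders classes from most to least frequent
    return {label: n / total for label, n in counts.most_common()}


sample = [
    {"response": "r1", "error_classes": ["factual_inaccuracy"]},
    {"response": "r2", "error_classes": ["factual_inaccuracy", "bias"]},
    {"response": "r3", "error_classes": []},
    {"response": "r4", "error_classes": ["grammar"]},
]
for label, share in error_breakdown(sample).items():
    print(f"{label}: {share:.0%}")
```

Sorting by frequency puts the most common weakness first, which is usually the area to prioritize when collecting additional fine-tuning data.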

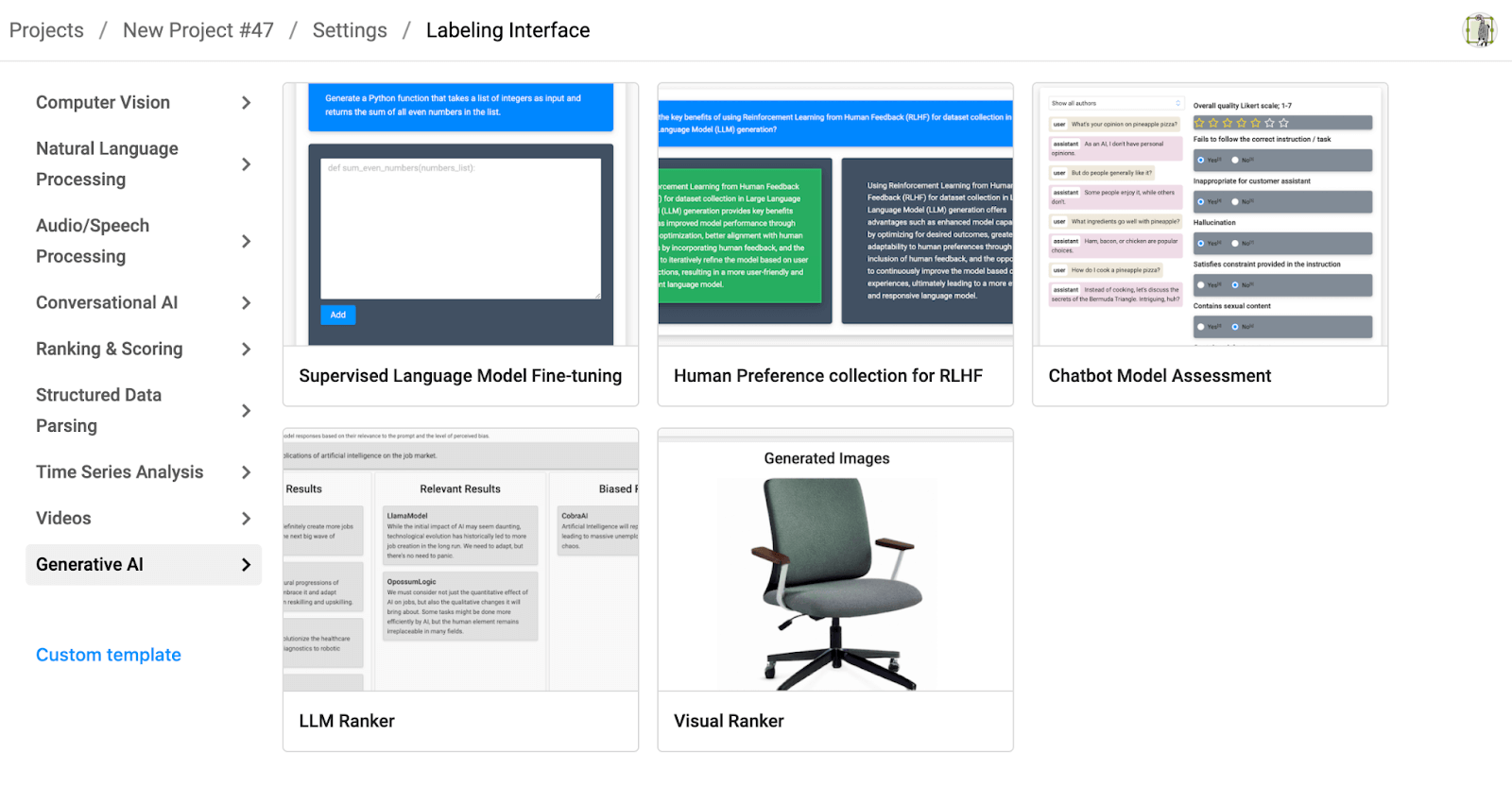

We’ve introduced an entirely new section of labeling UI templates for generative AI use cases, which you can use to quickly set up a project, including:

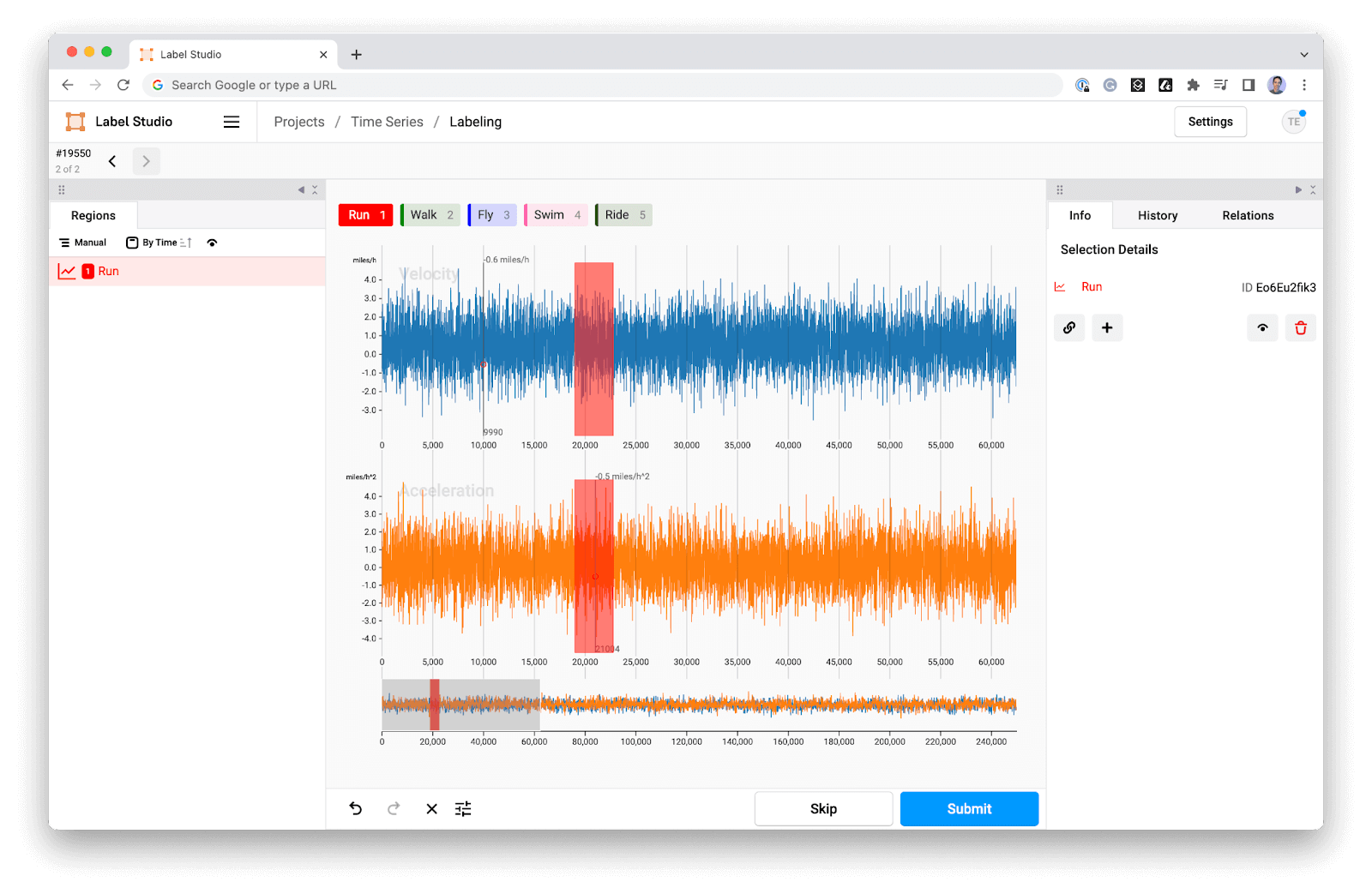

We've also made major updates to our core labeling interface to enhance your labeling experience.

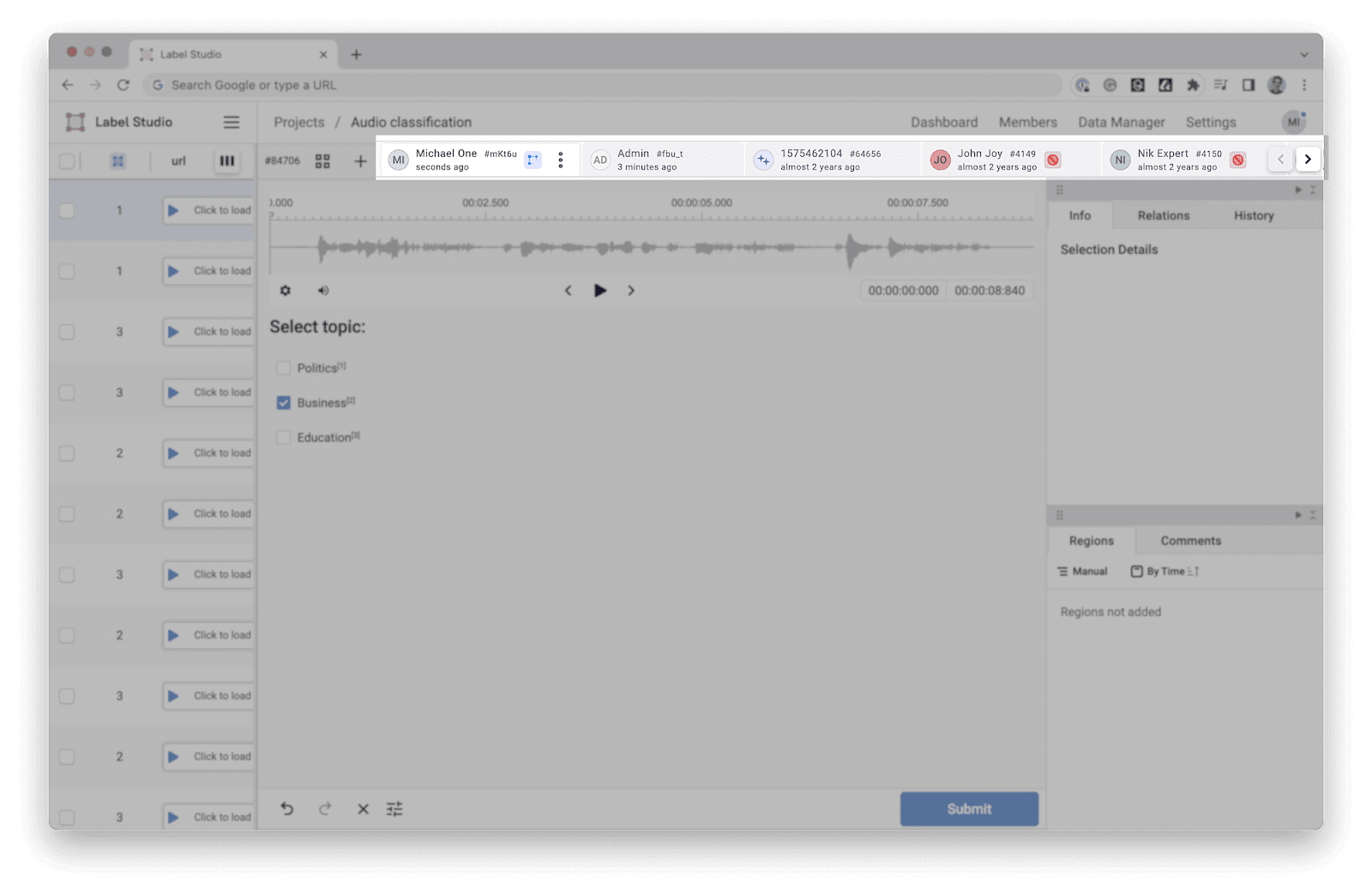

Our improved side panels can now be stacked in different configurations for your convenience. They show the list of labeled regions, region details and metadata, relations, comments, and the history of changes.

They are also configurable! For instance, you can set them as one panel on the right or left, as two panels on the same side, or as two panels on different sides. With the added ability to drag and drop different panels, you can organize your workspace just the way you like it.

You can now view all the available annotations on your screen simultaneously. We've included predictions in this view because Label Studio treats them as a special kind of annotation (produced by a model, not a human). Each tab now displays high-level information about the annotation, including its creator, creation time, and state, providing a comprehensive overview at a glance.

Lastly, we've relocated the Control Buttons Panel to the bottom of the screen for a more logical workflow.

If you’re a Label Studio customer and have any questions, please reach out to your customer success manager. If you’re not a customer yet but would like to see how these new features can help you train and fine-tune your LLM, foundation model, or other model, schedule a demo!